Agent as an interface

Interface powered by frontier intelligence

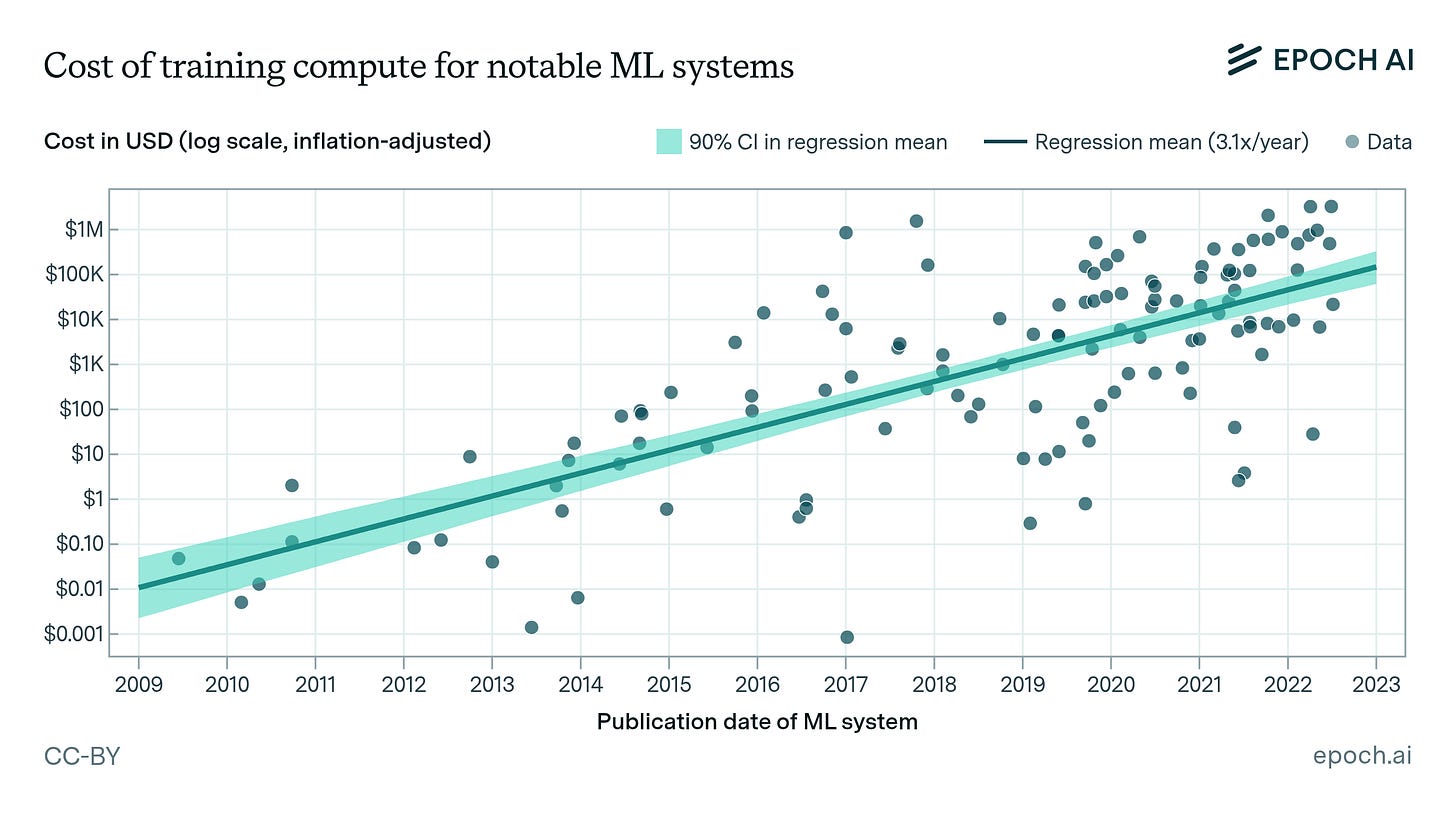

Over the past couple of years, training costs have been increasing exponentially, while the utility gained from each new generation of AI tools has been diminishing. There is a growing gap between the improving benchmark scores of frontier models and the tasks that AI tools can actually solve for consumers today.

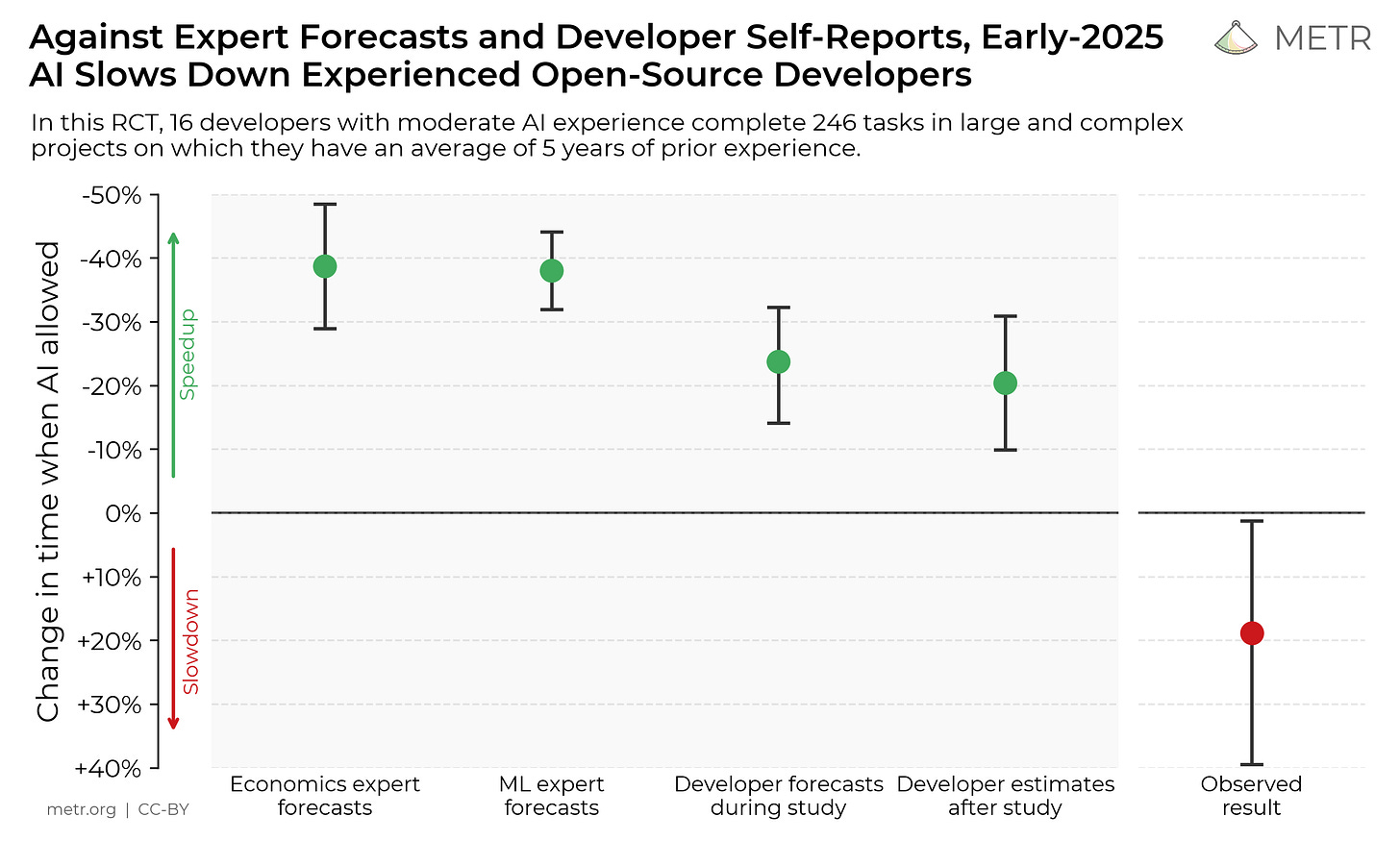

In the case of AI coding tools, a 2025 METR study found that experienced open-source developers took about 19% longer to complete tasks with them than without. Contributing factors include the review burden, or time spent babysitting the AI (writing prompts, then reviewing or fixing AI-generated code), accumulating technical complexity, and silent regressions. The amnesic, brown-nosing experience of current tools limits the effectiveness of the human-AI relationship in high-context settings.

I propose that to get the most out of these costly models, an intelligent interface is needed. This intelligence needs to adapt to the user’s skill level, previous experience, domain, task, device, and other factors to build the optimal interface for the user to accomplish their goal.

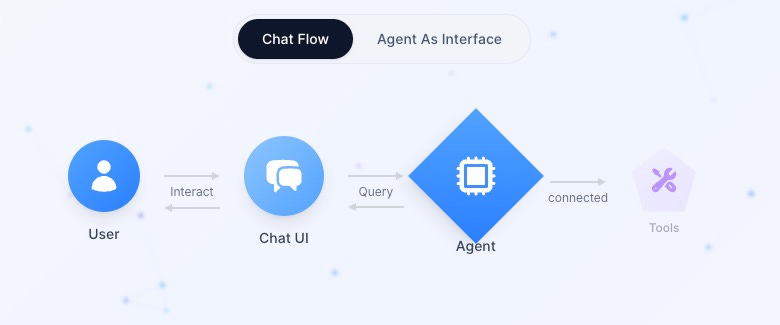

Today’s AI tools are largely extensions of pre-2020 workflows, simply appending a chat window or IDE plugin to existing applications. They are effective, but they feel old. Take software development, one of the areas with the most innovation in AI tooling. Cursor, Antigravity, and GitHub Copilot are all built on Visual Studio Code, an editor that reached 1.0 in 2016, which makes it a key dependency even for GitHub Copilot's competitors. Claude Code, Codex CLI, and Warp are all terminal-based, and while Claude Code is my tool of choice, the terminal is an old interface. Lovable, Superblocks, Bolt, and Replit are AI app builders that take a no-code approach to building. There's a lot of innovation in their interfaces, but chat remains a key modality, and they're not targeted at experienced developers.

In the current landscape, there isn’t a fresh interface built for experienced developers to accelerate their work. Desktop tools are limited by their own success: their existing efficiency makes radical change difficult. Mobile presents the opportunity to reimagine the interface. We are targeting experienced developers on mobile because a solution that works under those constraints should transfer to desktop and be accessible to all skill levels.

Speaking with experienced developers across the industry, I found that precise enterprise-level code changes, especially in languages like C++, are not yet reliable with AI. Many of them also can’t envision a mobile interface they would use instead of their laptop.

Yet at most tech companies, whether the hours are 9-to-5 or 996, the system doesn’t sleep. A ping could come in at any moment, and for many people, work-life balance is a struggle. At Microsoft, countless peers had their weekends, post-work dinners, vacations, and general free time interrupted by pings or tasks. To avoid this fate, I made my phone the tool that unlocked my free time. I would plan my builds and tests around cooking dinner, or take my phone to the gym and use the code explorer to help solve issues, or even write code, so the result would be ready later. All of these interfaces sucked. Coding on your phone doesn’t work today, but with the right interface, software engineers will be more productive and won’t have their lives interrupted by having to open a laptop for work.

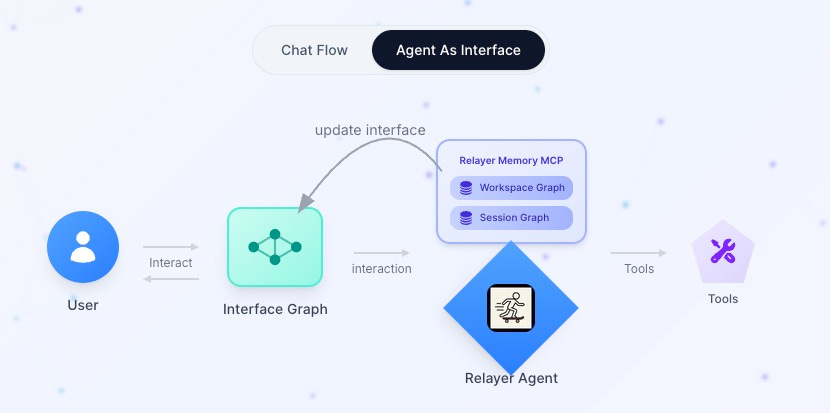

This is why we are building the Relayer Agent: an agent that builds an interface that adapts to the user and represents their workspace and conversation as a graph. Instead of relying on a static chat window where users must craft the perfect prompt to supply context, the agent chooses the optimal UX for each layer and state in the graph. This approach creates ‘implied context,’ allowing for complex workflows even on constrained devices. This agent powers the Relayer App, which lets you code anywhere. Because the agent has full control over the interface, users can navigate, edit, and review code using AI with laptop-like precision, without the friction of chat.

The Workspace Graph and the Conversation Graph are MCP servers that handle the graph deltas the agent emits. They decide which graph to write to, which parts of the graph to send to the frontend, and how to handle user interactions on different nodes. During reasoning, the agent uses standard search tools: keyword search, FAISS vector search, and lookup by node location in the filesystem or conversation. This technique moves the agent from a conversation-based approach to an action-based one, so that it acts as an interface while managing context across large codebases and complex tasks.
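
To make the idea of a graph delta concrete, here is a minimal sketch in Python. It is not the Relayer implementation: `Node`, `GraphDelta`, `Graph`, and `GraphRouter` are hypothetical names, the MCP transport is left out entirely, and "which parts of the graph to send to the frontend" is simplified to a node plus its direct neighbours.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    id: str
    kind: str                                  # hypothetical kinds: "file", "function", "message"
    data: dict = field(default_factory=dict)


@dataclass
class GraphDelta:
    target: str                                # assumed values: "workspace" or "conversation"
    upsert_nodes: list[Node] = field(default_factory=list)
    add_edges: list[tuple[str, str]] = field(default_factory=list)
    remove_nodes: list[str] = field(default_factory=list)


class Graph:
    def __init__(self) -> None:
        self.nodes: dict[str, Node] = {}
        self.edges: set[tuple[str, str]] = set()

    def apply(self, delta: GraphDelta) -> None:
        # Upserts first, then removals, then edges between nodes that still exist.
        for node in delta.upsert_nodes:
            self.nodes[node.id] = node
        for node_id in delta.remove_nodes:
            self.nodes.pop(node_id, None)
            self.edges = {(a, b) for a, b in self.edges if node_id not in (a, b)}
        for a, b in delta.add_edges:
            if a in self.nodes and b in self.nodes:
                self.edges.add((a, b))

    def neighborhood(self, node_id: str) -> list[str]:
        # The slice a frontend might receive: the focused node and its direct neighbours.
        ids = {node_id}
        for a, b in self.edges:
            if a == node_id:
                ids.add(b)
            if b == node_id:
                ids.add(a)
        return sorted(i for i in ids if i in self.nodes)


class GraphRouter:
    """Decides which graph a delta is written to, based on its target field."""

    def __init__(self) -> None:
        self.graphs = {"workspace": Graph(), "conversation": Graph()}

    def handle(self, delta: GraphDelta) -> Graph:
        graph = self.graphs[delta.target]
        graph.apply(delta)
        return graph
```

In this sketch the agent never produces chat text for the frontend to parse. It emits a `GraphDelta`, the router applies it to the right graph, and the frontend renders whatever slice `neighborhood` returns for the node the user is looking at.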

The dynamic interface breaks down the codebase layer by layer, from the macro scale (architecture), through a middle ground (pseudo-code), down to the micro scale (raw code).
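
One way to picture the layers is as an ordered scale that the interface can zoom along. The sketch below is an illustration only: the `Layer` enum, `zoom`, and `render` are hypothetical names, and the fallback to a coarser layer when a view is missing is an assumption, not a described feature.

```python
from enum import IntEnum


class Layer(IntEnum):
    ARCHITECTURE = 0   # macro scale
    PSEUDOCODE = 1     # middle ground
    RAW_CODE = 2       # micro scale


def zoom(layer: Layer, step: int) -> Layer:
    """Move toward raw code (positive step) or architecture (negative step), clamped to the scale."""
    return Layer(max(Layer.ARCHITECTURE, min(Layer.RAW_CODE, layer + step)))


def render(views: dict, layer: Layer) -> str:
    """Return the view stored for this layer, falling back to the nearest coarser layer that has one."""
    for candidate in range(layer, -1, -1):
        text = views.get(Layer(candidate))
        if text is not None:
            return text
    raise KeyError("no view available at or above the requested layer")


# Hypothetical views for one node of the workspace graph.
views = {
    Layer.ARCHITECTURE: "auth service -> token store",
    Layer.RAW_CODE: "def issue_token(user): ...",
}
```

Here `render(views, Layer.PSEUDOCODE)` falls back to the architecture view because no pseudo-code view exists yet, which keeps a small screen from ever showing an empty pane.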